For many Institutional Effectiveness (IE) and Institutional Research (IR) teams, the word “accreditation” triggers a familiar sense of dread. It signals the start of the “Great Data Scramble”—a frantic, months-long process of emailing department heads, hunting down spreadsheets, and piecing together fragmented evidence to prove your institution is meeting its standards.

While accreditation is meant to foster continuous improvement, the process of reporting often feels like a massive distraction. The sheer burden of data collection—pulling numbers from your Student Information System (SIS), Learning Management System (LMS), and financial software—can paralyze a team.

If you are spending 80% of your time collecting data and only 20% analyzing it, the balance is wrong. It’s time to talk about why the old way of reporting is broken and how Unified Data offers a path out of the weeds.

The Hidden Cost of the “Template Trap”

The most common tool for accreditation reporting is still, unfortunately, the static spreadsheet. You likely have a folder full of “Common Data Set” templates or custom Excel files that you email to the Registrar, the Provost, and the CFO, hoping they fill them out correctly.

While these templates provide a structure, they create two major burdens:

The Version Control Nightmare

We have all seen it: Enrollment_Data_Final_v3_ACTUAL.xlsx. When you rely on emailed templates, you aren’t managing data; you are managing files. Reconciling conflicting numbers from different stakeholders becomes a full-time job.

The “Snapshot” Fallacy

Accreditation bodies like HLC, SACSCOC, and NECHE increasingly demand evidence of continuous improvement. A static template captures your institution as it looked on the day someone filled it out, often six months before the visiting team arrives. By then the data is stale, which makes it difficult to answer real-time questions about student success trends.

Moving From “Collection” to “Connection”

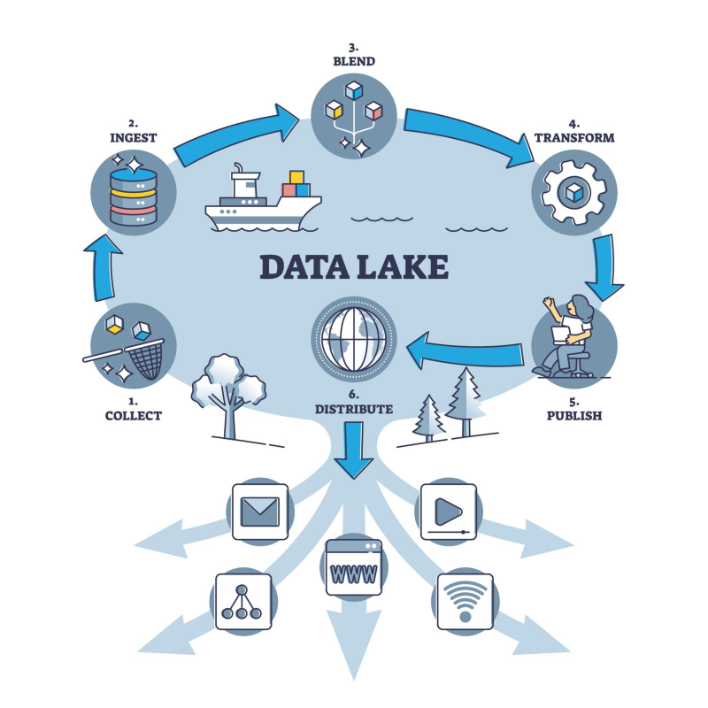

To lift the burden of accreditation, institutions need to stop asking “Who has this spreadsheet?” and start asking “How does our data flow?”

The solution lies in Unified Data. Instead of manually harvesting data once a decade for a reaffirmation review, or again before every annual report, modern institutions are building “always-on” data lakes. When your data is unified, accreditation metrics such as retention rates, faculty credentials, and financial health ratios are calculated automatically and continuously.
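To make “calculated automatically” more concrete, here is a minimal Python sketch of one such metric, a fall-to-fall retention rate, computed directly from a single unified table of enrollment records. The record fields (`student_id`, `term`, `first_time_full_time`, `completed`), the term labels, and the `retention_rate` function are hypothetical illustrations, not a Datatelligent schema or API, and the cohort rule is simplified relative to official IPEDS definitions.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class EnrollmentRecord:
    # Hypothetical unified record: one row per student per term.
    student_id: str
    term: str                   # hypothetical label format, e.g. "2023FA"
    first_time_full_time: bool  # entered as a first-time, full-time student this term
    completed: bool = False     # earned a credential by the end of this term


def retention_rate(records: list[EnrollmentRecord], cohort_term: str, next_term: str) -> float:
    """Share of a fall first-time, full-time cohort that is enrolled in the
    next fall or has completed a credential (simplified definition).

    Assumes `records` only covers terms up to and including `next_term`.
    """
    # The entering cohort: first-time, full-time students in the cohort term.
    cohort = {r.student_id for r in records
              if r.term == cohort_term and r.first_time_full_time}
    if not cohort:
        raise ValueError(f"No first-time, full-time students found in {cohort_term}")

    # Students who appear again in the following fall term.
    enrolled_next = {r.student_id for r in records if r.term == next_term}
    # Students who completed a credential in any supplied term.
    completed = {r.student_id for r in records if r.completed}

    retained = cohort & (enrolled_next | completed)
    return len(retained) / len(cohort)


# Hypothetical sample data: four students enter in fall 2023.
records = [
    EnrollmentRecord("A1", "2023FA", True),
    EnrollmentRecord("A2", "2023FA", True),
    EnrollmentRecord("A3", "2023FA", True),
    EnrollmentRecord("A4", "2023FA", True),
    EnrollmentRecord("T1", "2023FA", False),  # transfer student, not in the cohort
    EnrollmentRecord("A1", "2024FA", False),
    EnrollmentRecord("A2", "2024FA", False),
    EnrollmentRecord("A3", "2024SP", False, completed=True),
]

print(retention_rate(records, "2023FA", "2024FA"))  # 0.75
```

Because the metric is a function of the unified table rather than of a template someone filled in, rerunning it after each data load would keep the number current without anyone emailing a spreadsheet.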

How Datatelligent Automates the Evidence Room

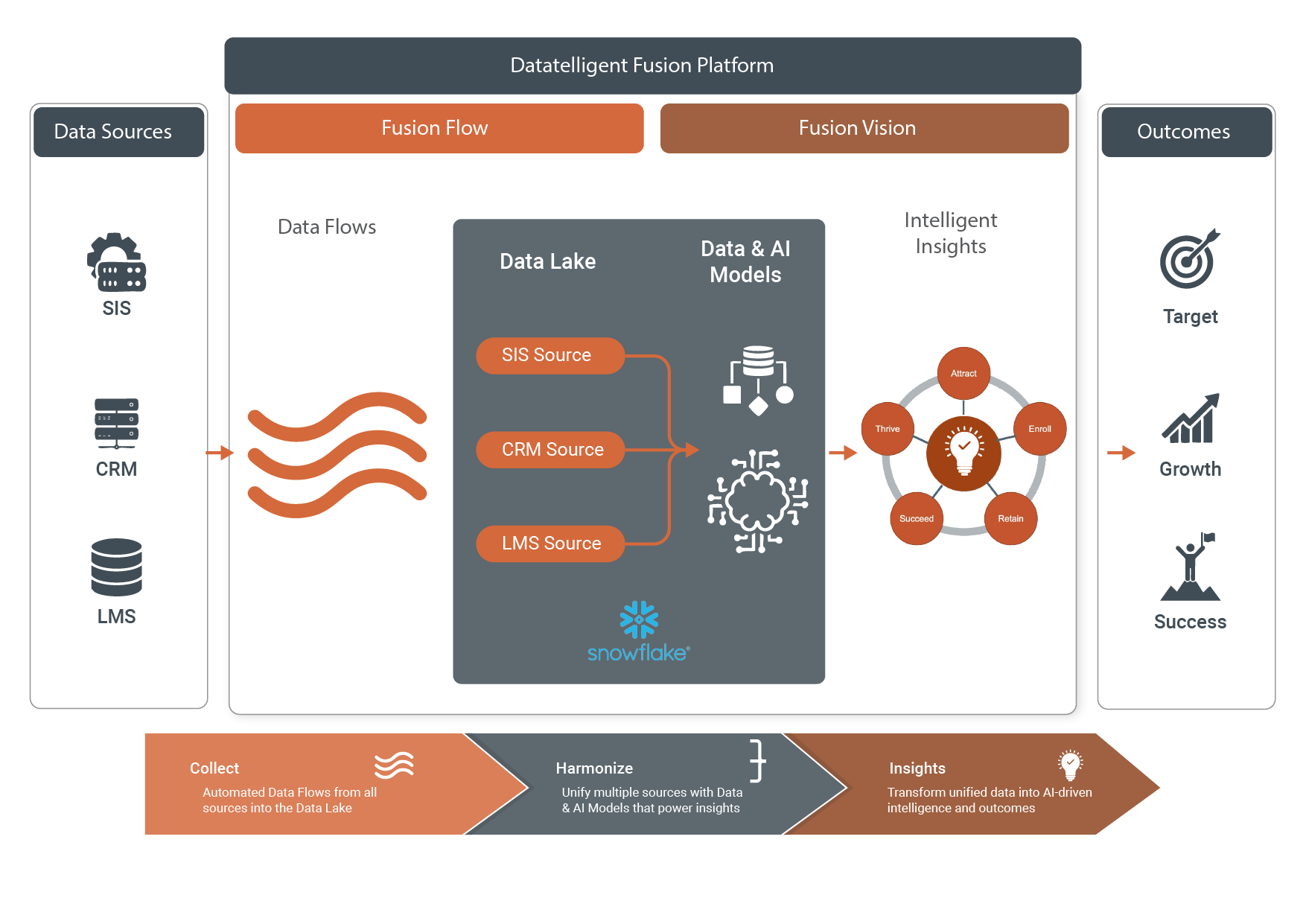

At Datatelligent, we believe that compliance shouldn’t come at the cost of your sanity. That’s why we built the Fusion Platform to automate the heavy lifting of higher ed data.

Automate Ingestion with Fusion Flow

The biggest bottleneck in accreditation is getting the data out of your siloed systems. Fusion Flow orchestrates the collection of data from each source (your SIS, CRM, LMS, and more) and automates its ingestion into a centralized data lake. No more manual exports; the data is already there, ready for analysis.

Visualize Compliance with Fusion Vision

Once your data is harmonized, Fusion Vision turns that raw information into intelligent insights. Imagine an “Accreditation Dashboard” that updates daily, allowing you to monitor your Key Performance Indicators (KPIs) year-round. When the self-study rolls around, you don’t need to scramble for evidence; you just point to the dashboard.

Free Tools to Get Started

We know that unified data is a journey. To help you benchmark your current standing, we offer resources like our Free IPEDS Comparison Tool. It’s a great example of how visualizing public data can save you hours of manual cross-referencing.

Turn Compliance into Strategy

Accreditation shouldn’t be a burden you survive; it should be an opportunity to thrive. By shifting from manual templates to a unified data platform, you can give your team the time they need to focus on what matters most: using that data to drive student success.

Explore the Fusion Platform and see how we unify your data ecosystem.